Revolutionizing Kubernetes Deployment: How Karios Transforms Infrastructure Orchestration

The deployment and management of Kubernetes clusters has long represented one of the most significant operational challenges in modern infrastructure management. Organizations investing in container orchestration frequently encounter deployment timelines measured in days or weeks, requiring specialized expertise and extensive manual configuration.

Karios fundamentally transforms this paradigm. It is the industry's first hypervisor platform to fully automate Kubernetes deployment across several of the most prominent Kubernetes solutions, including Sidero Labs Omni, reducing deployment timelines from days to minutes.

As the most complete infrastructure management platform and hypervisor in the world, Karios uniquely integrates fully automated physical server provisioning with advanced virtualization, Kubernetes orchestration, and integrated observability through Prometheus and Grafana, eliminating the traditional barriers between physical infrastructure and containerized applications.

This whitepaper introduces a new paradigm: Zero-Trust Infrastructure in next-generation hyperconverged systems. Unlike legacy solutions, security is not an add-on; it is embedded into the operating system, hypervisor, and hardware. With integrated vulnerability scanning, compliance reporting, and audit trails woven directly into the infrastructure fabric, organizations gain continuous assurance while achieving operational simplicity, scalability, and efficiency.

The Kubernetes Deployment Challenge

Kubernetes has established itself as the de facto standard for container orchestration, yet its deployment complexity remains a persistent barrier to adoption. Traditional Kubernetes implementations require organizations to navigate multiple layers of technical complexity:

- Physical Server Provisioning: Organizations must first provision and configure physical server infrastructure, a process traditionally requiring manual BIOS configuration, operating system installation, network configuration, and storage array setup. This foundational layer alone can consume days of engineering time before Kubernetes deployment even begins.

- Infrastructure Layer Preparation: Following physical server provisioning, teams must establish the underlying compute, storage, and networking infrastructure. Capacity planning, hardware configuration, and hypervisor deployment create additional timeline extensions and potential points of failure.

- Cluster Bootstrapping: The initialization of Kubernetes control plane components, including the API server, scheduler, controller manager, and etcd, requires precise configuration and coordination. Certificate management, authentication configuration, and network policy establishment add further layers of complexity that demand specialized expertise.

- Node Configuration and Management: Worker nodes must be individually configured with container runtimes, kubelet services, and appropriate networking plugins. Ensuring consistency across nodes while maintaining security postures demands rigorous attention to configuration management and version control.

- Network and Storage Integration: Implementing Container Network Interface (CNI) plugins and Container Storage Interface (CSI) drivers requires deep technical knowledge and careful integration testing. Network policy enforcement, service mesh configuration, and persistent storage provisioning introduce additional configuration requirements that extend deployment timelines.

- High Availability Configuration: Production-grade deployments necessitate multi-master architectures, load balancer configuration, and distributed etcd clusters. Achieving true high availability requires careful architectural planning, extensive testing, and sophisticated orchestration across multiple failure domains.

- Observability and Monitoring: Establishing comprehensive monitoring, metrics collection, logging aggregation, and alerting infrastructure represents another substantial undertaking. Organizations must deploy and configure monitoring solutions, establish dashboards, define alert thresholds, and integrate with incident management systems, work that can add days to the deployment timeline.

- Operational Readiness: Beyond initial deployment, organizations must implement backup strategies, disaster recovery procedures, and operational runbooks, work that considerably extends the timeline to production readiness.
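The high-availability requirement above comes down to majority arithmetic. etcd uses the Raft consensus protocol, so a cluster of n members needs floor(n/2) + 1 of them available to keep accepting writes. The minimal Python sketch below, assuming one etcd member per control-plane node (the "stacked" topology) and using hypothetical node and rack names, shows how fault tolerance grows with member count and how to check whether a placement survives the loss of any single failure domain. It is an illustration only, not Karios or Sidero Labs code.

```python
# A minimal sketch, assuming a "stacked" topology where every
# control-plane node runs one etcd member. Node and rack names are
# hypothetical examples, not Karios or Sidero Labs identifiers.
from collections import Counter


def quorum(members: int) -> int:
    """Majority of members etcd (Raft) needs to keep accepting writes."""
    return members // 2 + 1


def fault_tolerance(members: int) -> int:
    """Number of members that can fail while a majority still survives."""
    return members - quorum(members)


def survives_any_domain_loss(placement: dict[str, str]) -> bool:
    """True if losing any single failure domain still leaves a quorum.

    `placement` maps an etcd member name to its failure domain
    (for example a rack, chassis, or availability zone).
    """
    total = len(placement)
    if total == 0:
        return False
    members_per_domain = Counter(placement.values())
    return all(total - lost >= quorum(total) for lost in members_per_domain.values())


if __name__ == "__main__":
    for n in range(1, 6):
        print(f"{n} members: quorum={quorum(n)}, tolerates {fault_tolerance(n)} failure(s)")

    spread = {"cp-1": "rack-a", "cp-2": "rack-b", "cp-3": "rack-c"}
    packed = {"cp-1": "rack-a", "cp-2": "rack-a", "cp-3": "rack-b"}
    print(survives_any_domain_loss(spread))  # True: any one rack can be lost
    print(survives_any_domain_loss(packed))  # False: losing rack-a leaves 1 of 3
```

The printed table makes the design choice visible: going from three to four members raises the quorum from two to three without tolerating any additional failure, which is why odd member counts are the usual recommendation.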

This complexity typically necessitates teams of specialized engineers spending days or weeks on initial deployment, followed by ongoing operational overhead for maintenance, updates, and troubleshooting. The knowledge gap between infrastructure teams and Kubernetes expertise creates organizational friction, delays time-to-value for containerized applications, and increases total cost of ownership substantially.

The Karios Solution: Unified Automation from Physical Servers to Kubernetes

Karios represents a fundamental reimagining of infrastructure management, delivering the world's first hypervisor with fully automated Kubernetes deployment capabilities integrated seamlessly with physical server provisioning. This comprehensive approach eliminates the traditional segmentation between physical infrastructure, virtualization, and container orchestration, providing a unified platform that manages the complete stack.

The Next-Gen Hyperconverged Solution

The Karios Core platform embeds zero-trust security through Karios Shield and builds compliance capabilities directly into the operating system, hypervisor, and hardware layers, delivering continuous assurance by design.

Key Differentiators:

- Automated Discovery and Enrollment: Physical servers are automatically discovered and enrolled into the Karios platform, eliminating manual registration and configuration processes.

- Intelligent Provisioning: Operating system deployment, network configuration, and storage initialization occur automatically according to workload requirements and organizational policies.

- Unified Management: Physical server resources, virtualized infrastructure, and containerized workloads are managed through a single unified interface, eliminating the operational complexity inherent in multi-platform approaches.

Fully Automated Kubernetes Deployment: Building upon its comprehensive physical server provisioning foundation, Karios delivers unprecedented Kubernetes automation:

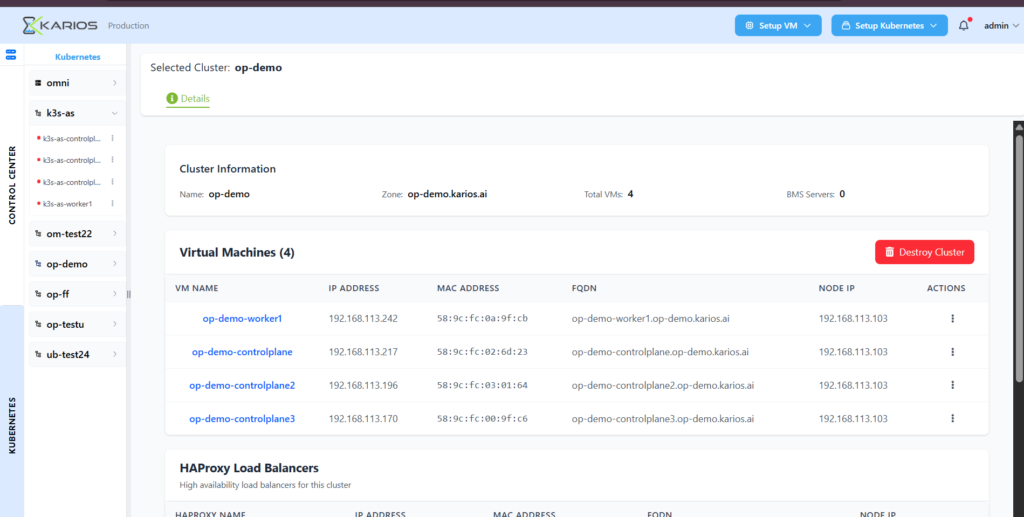

- One-Click Deployment: Karios provides fully automated Kubernetes cluster provisioning through an intuitive interface. Infrastructure teams can deploy production-grade Kubernetes clusters in minutes rather than days, with all necessary components (control plane, worker nodes, networking, and storage) configured automatically according to industry best practices.

- Intelligent Resource Allocation: The platform's integrated data center infrastructure management (DCIM) capabilities enable intelligent workload placement and resource optimization across physical server and virtualized infrastructure. Karios automatically provisions the necessary compute and storage resources from physical hardware through to Kubernetes namespaces, eliminating manual infrastructure preparation at every layer.

- Automated High Availability: Multi-master configurations, load balancing, and distributed control plane deployments are established automatically across physical and logical failure domains, ensuring production-grade reliability without manual intervention.

- Integrated Security: Security configurations spanning physical server firmware, hypervisor hardening, certificate management, RBAC policies, and network segmentation are implemented automatically according to industry best practices and compliance requirements.

- Lifecycle Management: From initial provisioning through ongoing operations, updates, and decommissioning, Karios manages the complete infrastructure lifecycle automatically, reducing operational overhead and minimizing security exposure windows.

Integrated Observability with Prometheus and Grafana: Karios further distinguishes itself by including fully integrated observability through the Prometheus metrics system and Grafana dashboards, eliminating yet another deployment task that traditionally requires days of configuration:

- Automatic Metrics Collection: Prometheus integration is configured automatically during Kubernetes deployment, immediately capturing comprehensive metrics from control plane components, worker nodes, container workloads, and underlying physical server infrastructure.

- Pre-Configured Dashboards: Grafana dashboards are deployed automatically with production-ready visualizations covering cluster health, resource utilization, application performance, and infrastructure metrics across the entire stack from physical servers to containerized applications.

- Unified Visibility: Organizations gain immediate visibility into their complete infrastructure (physical server performance, virtualization metrics, Kubernetes cluster health, and application behavior) through a single integrated observability framework.

- Automated Alerting: Alert rules are established automatically based on industry best practices, providing immediate notification of potential issues across the infrastructure stack without requiring manual threshold configuration.

- Historical Analysis: Prometheus's time-series database retains historical metrics for a configurable period, enabling trend analysis, capacity planning, and performance optimization across physical server infrastructure and Kubernetes workloads.

This integrated observability eliminates the substantial deployment and configuration effort typically required to establish production-grade monitoring for Kubernetes environments, further accelerating time-to-value and reducing operational complexity.
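For a sense of what this monitoring layer provides, the minimal Python sketch below builds a request against Prometheus's documented HTTP API (`/api/v1/query`), parses the instant-vector response format it returns, and flags nodes above a utilization threshold, the same kind of check an alert rule encodes. The server URL, the PromQL expression (which assumes node_exporter's `node_cpu_seconds_total` metric is being scraped), and the 0.8 threshold are illustrative assumptions, not Karios defaults or endpoints.

```python
# A minimal sketch against the standard Prometheus HTTP API.
# Assumptions: the base URL, PromQL expression, and threshold are
# illustrative placeholders; no Karios-specific endpoint is implied.
import json
import urllib.parse
import urllib.request


def instant_query_url(base_url: str, promql: str) -> str:
    """Build a GET URL for Prometheus's /api/v1/query endpoint."""
    return f"{base_url.rstrip('/')}/api/v1/query?" + urllib.parse.urlencode({"query": promql})


def fetch(url: str, timeout: float = 5.0) -> dict:
    """Perform the HTTP request (needs a reachable Prometheus server)."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return json.load(resp)


def vector_samples(response: dict) -> list[tuple[dict, float]]:
    """Extract (labels, value) pairs from an instant-vector response."""
    if response.get("status") != "success":
        raise RuntimeError(response.get("error", "query failed"))
    data = response["data"]
    if data["resultType"] != "vector":
        raise ValueError(f"expected vector, got {data['resultType']}")
    # Each sample's value is [unix_timestamp, "value-as-string"].
    return [(s["metric"], float(s["value"][1])) for s in data["result"]]


def over_threshold(samples: list[tuple[dict, float]], threshold: float) -> list[str]:
    """Return the 'instance' label of every series above the threshold."""
    return [labels.get("instance", "?") for labels, value in samples if value > threshold]


if __name__ == "__main__":
    # Per-instance CPU utilization, assuming node_exporter metrics exist.
    query = '1 - avg by (instance) (rate(node_cpu_seconds_total{mode="idle"}[5m]))'
    print(instant_query_url("http://prometheus.example:9090", query))

    # Offline stand-in for fetch(...), shaped like a real instant-vector reply.
    sample = {
        "status": "success",
        "data": {
            "resultType": "vector",
            "result": [
                {"metric": {"instance": "node-1:9100"}, "value": [1731400000.0, "0.42"]},
                {"metric": {"instance": "node-2:9100"}, "value": [1731400000.0, "0.91"]},
            ],
        },
    }
    print(over_threshold(vector_samples(sample), 0.8))  # ['node-2:9100']
```

In a live setup the `sample` dictionary would be replaced by `fetch(instant_query_url(...))`; keeping parsing separate from the network call makes the threshold logic testable offline.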

Sidero Labs Integration: Best-of-Breed Kubernetes

Karios stands as the only platform in the world to achieve full integration with Sidero Labs Omni, delivering one of the most advanced Kubernetes implementations available within the most complete infrastructure management platform ever developed.

This integration represents a significant technological achievement, combining Sidero Labs’ expertise in Kubernetes lifecycle management with Karios’ comprehensive infrastructure automation spanning physical server infrastructure through containerized applications.

Sidero Labs Omni provides enterprise-grade Kubernetes management with sophisticated lifecycle automation, declarative cluster management, and robust security controls. The integration within Karios delivers unprecedented capabilities:

- Unified Stack Management: Organizations gain a single platform managing the complete infrastructure stack, from physical server provisioning through virtualization and Kubernetes orchestration, eliminating the fragmentation, integration complexity, and vendor management overhead inherent in multi-vendor approaches.

- Automated Lifecycle Management: Cluster updates, security patches, and version upgrades are managed automatically through Sidero Labs’ proven automation framework, reducing operational overhead and security risk across the entire infrastructure stack.

- Declarative Operations: Infrastructure and application teams can define desired states declaratively across all layers (physical server resources, virtual machines, and Kubernetes clusters), with Karios and Sidero Labs ensuring continuous compliance with the specified configurations.

- Enterprise Scalability: The integrated solution scales seamlessly from departmental deployments to global infrastructure, supporting thousands of nodes across multiple geographic locations while maintaining consistent management and operational frameworks.

- Best-of-Breed Integration: Sidero Labs’ reputation for delivering sophisticated, production-grade Kubernetes implementations combines with Karios’ comprehensive platform capabilities to provide an unmatched solution for organizations requiring enterprise-scale container orchestration.
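The declarative model described above follows the same pattern as Kubernetes controllers: an operator states the desired state, and a controller repeatedly compares it with the observed state and acts on the difference. The Python sketch below shows only that diff step, using hypothetical node fields and names; it is a generic illustration of reconciliation, not the Omni or Karios API. A real controller would also run continuously, order its actions (for example, upgrading control-plane nodes one at a time), and handle failures.

```python
# A generic sketch of the "diff" step in desired-state reconciliation.
# NodeSpec fields, roles, and node names are hypothetical; they do not
# mirror the Sidero Labs Omni or Karios data models.
from dataclasses import dataclass, field


@dataclass(frozen=True)
class NodeSpec:
    name: str
    role: str      # e.g. "controlplane" or "worker" (illustrative labels)
    version: str   # desired Kubernetes version


@dataclass
class Plan:
    create: list[NodeSpec] = field(default_factory=list)
    update: list[NodeSpec] = field(default_factory=list)
    delete: list[NodeSpec] = field(default_factory=list)


def reconcile(desired: list[NodeSpec], actual: list[NodeSpec]) -> Plan:
    """Compute the actions that move `actual` toward `desired`."""
    want = {n.name: n for n in desired}
    have = {n.name: n for n in actual}
    plan = Plan()
    for name, spec in want.items():
        if name not in have:
            plan.create.append(spec)          # node missing entirely
        elif have[name] != spec:
            plan.update.append(spec)          # e.g. version upgrade or role change
    plan.delete = [spec for name, spec in have.items() if name not in want]
    return plan


if __name__ == "__main__":
    desired = [
        NodeSpec("cp-1", "controlplane", "v1.31.2"),
        NodeSpec("w-1", "worker", "v1.31.2"),
        NodeSpec("w-2", "worker", "v1.31.2"),
    ]
    actual = [
        NodeSpec("cp-1", "controlplane", "v1.30.6"),
        NodeSpec("w-1", "worker", "v1.31.2"),
        NodeSpec("w-3", "worker", "v1.31.2"),
    ]
    plan = reconcile(desired, actual)
    print("create:", [n.name for n in plan.create])  # ['w-2']
    print("update:", [n.name for n in plan.update])  # ['cp-1']
    print("delete:", [n.name for n in plan.delete])  # ['w-3']
```

Because the function is a pure comparison, running it again after the plan has been applied yields an empty plan, which is the property that lets a declarative system converge and stay converged.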

Competitive Differentiation

The combination of fully automated physical server provisioning, comprehensive infrastructure management capabilities, fully automated Kubernetes deployment, and integrated observability distinguishes Karios from all conventional approaches:

- Traditional Hypervisors (VMware, Nutanix, Hyper-V): Provide virtualization capabilities but lack automated physical server provisioning and require separate tools with extensive manual processes for Kubernetes deployment, monitoring configuration, and management. These platforms assume pre-provisioned infrastructure and leave organizations managing fragmented toolchains.

- Lightweight Virtualization Platforms (Proxmox): Offer basic compute virtualization but lack automated physical server provisioning, integrated Kubernetes orchestration, native observability, DCIM capabilities, and enterprise-grade automation frameworks. Organizations must integrate multiple additional solutions to achieve comprehensive infrastructure management.

- Standalone Kubernetes Distributions: Provide Kubernetes functionality but require separate infrastructure provisioning, lack physical server automation, omit integrated observability, and lack the comprehensive platform capabilities essential for holistic infrastructure management. These solutions assume underlying infrastructure exists and is managed separately.

- Physical Server Provisioning Tools: Deliver physical infrastructure automation but lack integrated virtualization, Kubernetes orchestration, observability platforms, and comprehensive management capabilities, requiring organizations to integrate multiple platforms and manage complex toolchains.

- Observability Solutions (Standalone Prometheus/Grafana): Require separate deployment, configuration, and integration efforts, adding days to deployment timelines and creating additional operational overhead through fragmented monitoring infrastructure.

Karios uniquely delivers the complete stack, from automated physical server provisioning through virtualization, containerized application orchestration, and comprehensive observability, within a unified platform architecture. This comprehensive approach dramatically reduces operational complexity, eliminates integration challenges, and substantially lowers total cost of ownership while accelerating time-to-value for containerized applications.

Deployment Timeline Comparison

Consider the traditional deployment timeline for a production Kubernetes cluster with comprehensive monitoring:

Traditional Approach (5-8 days):

- Days 1-2: Physical server provisioning, BIOS configuration, OS installation

- Days 2-3: Network and storage configuration, hypervisor deployment

- Days 3-5: Kubernetes cluster planning, control plane deployment, worker node configuration

- Days 5-6: Networking plugin configuration, storage integration, security hardening

- Days 6-7: Prometheus deployment, Grafana configuration, dashboard creation, alert rule definition

- Days 7-8: Integration testing, operational validation

Karios Approach (minutes):

- Automated physical server discovery and provisioning

- Automated infrastructure resource allocation

- One-click Kubernetes cluster deployment with Sidero Labs Omni integration

- Automated networking, storage, and security configuration

- Automatic Prometheus and Grafana deployment with pre-configured dashboards

- Production-ready cluster with high availability, monitoring, and alerting

This transformation from days to minutes represents not merely an incremental improvement but a fundamental paradigm shift in infrastructure operations. Organizations can now deploy fully monitored, production-grade Kubernetes clusters on-demand, experiment with different configurations, and respond to business requirements with unprecedented agility.

Conclusion

Karios transforms Kubernetes deployment from a multi-day, expert-dependent process, spanning physical server provisioning, cluster initialization, and observability configuration, into an automated workflow measured in minutes. This represents a fundamental advancement in infrastructure management: Karios is the first hypervisor platform in the world to fully automate Kubernetes deployment across numerous solutions, including Sidero Labs Omni.

Nov 12, 2025

By admin